The CS program at my university requires all students to complete a group project of their choosing as part of the required software engineering course, known to the wider college of engineering as the senior design project. My group consisted of five people, and our goal was to use an iRobot Create (like a Roomba, but without the vacuum) and a Microsoft Kinect to build a robot that would take gesture and voice commands and follow or avoid a person. The finished product looked like this:

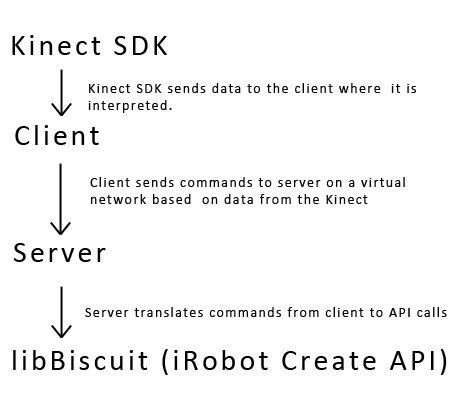

From a hardware point of view, the robot consisted of three major components. On the bottom was the iRobot Create, and sitting on top of it were a Windows 8 tablet and a Kinect. The Kinect talked to the tablet, which ran our software to interpret the data from the Kinect and then sent the appropriate commands to the Create. The resulting software stack was as follows:

Deciding to go with a client/server model gave us a few advantages.

- It made it possible to easily extend the project to other forms of control, such as remotes running on a phone or tablet not attached to the robot.

- We could also have easily implemented the ability for multiple robots to interact with one another.

- We were able to implement the API in C, while using C# to interact with the Kinect SDK.

- It provided a natural separation between components that allowed us to divide the work within the group more easily.
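To make the client/server split concrete, here is a minimal sketch of what a line-based command protocol between a client and the server could look like. This is not the protocol we actually used: the verbs (`DRIVE`, `STOP`, `MODE`), the argument layout, and the `Command`, `encode`, and `decode` names are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass

# Hypothetical wire format: one ASCII line per command, e.g. "DRIVE 200 -50\n".
# The verbs and their argument counts are assumptions made for illustration.
VERBS = {"DRIVE": 2, "STOP": 0, "MODE": 1}


@dataclass(frozen=True)
class Command:
    verb: str
    args: tuple = ()


def encode(cmd: Command) -> bytes:
    """Client side: serialize a command as a newline-terminated ASCII line."""
    parts = [cmd.verb, *(str(a) for a in cmd.args)]
    return (" ".join(parts) + "\n").encode("ascii")


def decode(line: bytes) -> Command:
    """Server side: parse and validate one line; raises ValueError on bad input."""
    fields = line.decode("ascii").split()
    if not fields or fields[0] not in VERBS:
        raise ValueError(f"unknown command: {line!r}")
    verb, args = fields[0], fields[1:]
    if len(args) != VERBS[verb]:
        raise ValueError(f"{verb} expects {VERBS[verb]} argument(s)")
    if verb == "DRIVE":
        # DRIVE carries two integers (assumed here to be a speed and a turn value).
        return Command(verb, tuple(int(a) for a in args))
    return Command(verb, tuple(args))
```

Because a format like this is plain text over a socket, any client that can open a connection (a C# Kinect client, a phone remote, another robot) could send the same commands without knowing anything about the code that talks to the Create.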

On my end, I wrote the Create API, called libBiscuit, and the server (including defining the protocol for communication between the client and server). I discussed libBiscuit extensively in my blog post about it, so I won’t write about it here. Three other group members took on the task of interacting with the Kinect and writing the client. They got creative and added several modes in addition to the voice and gesture commands. The modes are:

- Follow mode: Asimov follows a person around

- Avoid mode: Asimov avoids a person

- Center mode: Asimov turns to look at a person as he or she moves around

- Drinking mode: Users sit in a circle and Asimov drives around inside the circle carrying a shot glass. When Asimov stops, the person closest to it must take the shot. Okay, so we didn’t have time to implement this, but it would have been a lot of fun to test!
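The follow, avoid, and center modes can all be thought of as simple steering rules driven by where the tracked person is. The sketch below is a hypothetical illustration, not the client code my teammates wrote: the `steer` function, the gains, the 1.5 m target distance, and the normalized speed range are all assumptions.

```python
def clamp(value, limit):
    """Restrict value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def steer(mode, offset_m, distance_m, target_m=1.5):
    """Return (forward, turn) speeds, each in [-1, 1].

    offset_m:   person's sideways offset from the sensor's center line
                (positive = to the right); an assumed input.
    distance_m: person's distance from the sensor; an assumed input.
    target_m:   distance the robot tries to keep; an arbitrary default.
    """
    turn_gain = 1.0      # assumed proportional gains
    forward_gain = 0.5

    # Every mode turns to face the person.
    turn = clamp(offset_m * turn_gain, 1.0)
    error = distance_m - target_m

    if mode == "center":
        forward = 0.0                                   # rotate in place only
    elif mode == "follow":
        forward = clamp(max(error, 0.0) * forward_gain, 1.0)  # approach when too far
    elif mode == "avoid":
        forward = clamp(min(error, 0.0) * forward_gain, 1.0)  # back away when too close
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return forward, turn
```

For example, `steer("follow", 0.0, 2.5)` asks the robot to drive forward because the person is farther than the target distance, while `steer("avoid", 0.0, 0.5)` asks it to back up.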

Below is a video of Asimov in testing (and being held together with rubber bands!).